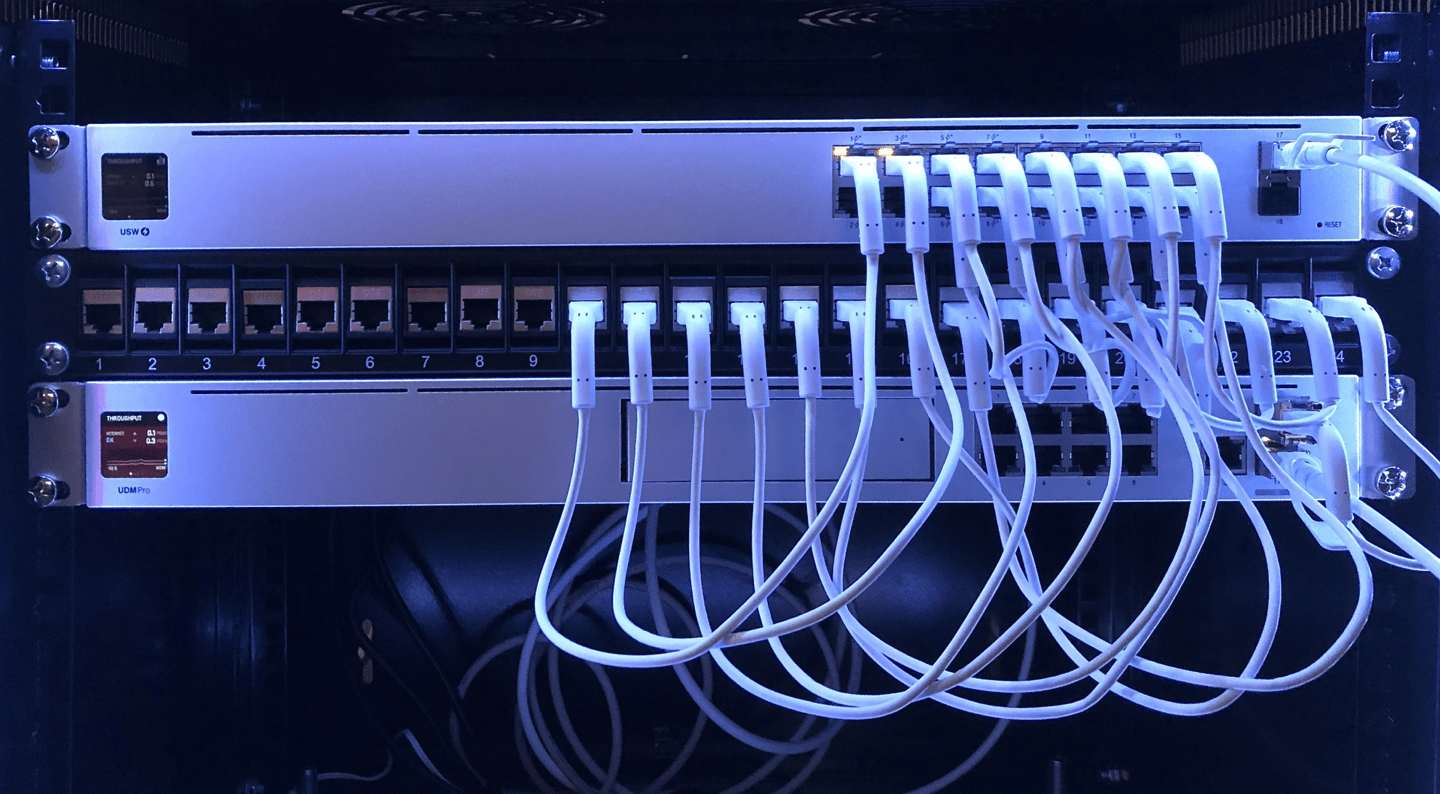

Ubiquiti UDM Pro running FRR BGP

BGP is the routing protocol of the Internet, and using it locally in your own network for things like DNS and other stateless service redundancy is fantastic.

Updated for Unifi OS 3.x for UDM/Pro/SE. Instructions are included for 1.x, 2.x and now 3.x.

I’ve successfully configured my Ubiquiti UDM Pro (running Unifi OS 3.0.20) to utilise Border Gateway Protocol for internal high availability of AnyCast DNS along with reverse proxy communication.

BGP is wonderful for this job as DNS clients do a horrible job at failing over to secondary servers, plus clustering reverse proxies with things like "heartbeat" adds significant complexity and monitoring requirements at the host side.

I guess a routing protocol like OSPF could also be used instead of BGP, although I've not tried it. Something like http://ddiguru.com/blog/anycast-dns-part-4-using-ospf-basic.

I stuck with an old USG Pro 4 for ages because it ran Edge-OS, which included the ability to use a custom config.gateway.json on the Unifi Controller site to define BGP and neighbors. But I wanted a UDM Pro so that I could enable IDS/IPS and get full line-rate gigabit to my ISP, and not be limited to around 300Mbps when doing this with the USG Pro 4. I didn’t upgrade until I was sure that I could get BGP going on the UDM.

Getting BGP working is pretty straightforward. For Unifi OS 1.x and 2.x it requires the creation of a podman container, but for 3.x FRRouting can be installed directly on the OS. (See the "fascinating link" at the very bottom of this post for running containers on 3.x, which I have not tried.)

The “other side” of the BGP neighbor configuration is not exhaustively included in this post. In short, I have three FreeIPA DNS servers, each running FRR to advertise a loopback address that the DNS daemons listen on, which is “192.168.9.9”, and three HAProxy reverse proxies that advertise “192.168.9.10” in the same way. A sample configuration is at the end of this post.

Usual Internet caveats apply. This worked for me, but if you break your router it's on you. A factory reset should get it going again, so make sure you have a backup of your UDM.

This all requires you to SSH to the UDM. For 1.x and 2.x, go to its web interface, select settings, advanced, enable the SSH slider and choose a password. The user name is root. For 3.x it's found at console settings, SSH check box. Then SSH into it using your favourite tool.

For Unifi OS 3.x: Install FRRouting using "on-boot"

(For earlier OS releases container creation instructions are later in this post.)

FRR can be directly installed by following the instructions at https://deb.frrouting.org/, however this will not persist when Unifi OS is updated. To work around this, the approach I use is to check whether FRR is installed at every boot. If it is, move on, but if not set it up again.

The configuration for FRR in /etc will persist upgrades, so only needs to be done once.

As an aside, the reason a FRRouting install will not persist is part of the Unifi OS update script, which deletes a bunch of the root filesystem...

log_msg "cleanup dir ${dir}"

for f in bin sbin lib lib64 usr etc/alternatives etc/default/unifi etc/udev etc/syslog-ng/syslog-ng.conf; do

rm -rf ${dir}/$f

done

# clean up var

find ${dir}/var ! -type d ! -path "*var/log*" -exec rm -f {} \;

I have verified that the approach of using an on-boot method works like a champ, manually updating from 3.0.20 to the same release 3.0.20 using ubnt-systool fwupdate https://fw-download.ubnt.com/data/unifi-dream/6584-UDMPRO-3.0.20-eb525b977921438a9d0e239ae631d2a1.bin, which saw everything FRRouting put back nicely in a few minutes after update. So I'd recommend using the same method for anything else you want to install on a Unifi OS. Like nano. Ugh.

First install boostchicken’s excellent on-boot-script-2.x.

curl -fsL "https://raw.githubusercontent.com/unifi-utilities/unifios-utilities/HEAD/on-boot-script-2.x/remote_install.sh" | /bin/sh

Next, create an on-boot script /data/on_boot.d/10-onboot-frr.sh

#!/bin/bash

# If FRR is not installed then install and configure it

if ! command -v /usr/lib/frr/frrinit.sh &> /dev/null; then

echo "FRR could not be found"

# Handle instances of release change

rm -f /etc/apt/sources.list.d/frr.list

curl -s https://deb.frrouting.org/frr/keys.asc | apt-key add -

echo deb https://deb.frrouting.org/frr $(lsb_release -s -c) frr-stable | tee -a /etc/apt/sources.list.d/frr.list

# Install FRRouting

apt-get update && apt-get -y install --reinstall frr frr-pythontools

if [ $? -eq 0 ]; then

echo "Installation successful, updating configuration"

# Minimal config, existing will remain in /etc

echo > /etc/frr/vtysh.conf

rm -f /etc/frr/frr.conf

chown frr:frr /etc/frr/vtysh.conf

fi

service frr restart

# Install other nice-to-haves for config editing

apt-get -y install --reinstall nano

fi

Make it executable and run it to install FRR. Running it a second time should do absolutely nothing.

chmod +x /data/on_boot.d/10-onboot-frr.sh

/data/on_boot.d/10-onboot-frr.sh

Configure FRR

Edit the /etc/frr/daemons configuration file, enabling BGP by setting bgpd=yes.
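If you'd rather script that one-line change than open an editor, something like the following sketch should do it. The function name enable_bgpd is made up for this example, and it assumes the daemons file already contains a line starting with bgpd=. It rewrites the file in place through a temporary copy so ownership and permissions are left alone. The complete file I ended up with follows after it.

```shell
#!/bin/sh
# Sketch only: flip the bgpd line in an FRR daemons file to "yes".
# enable_bgpd is a hypothetical helper name, not something FRR ships.
enable_bgpd() {
    daemons_file="${1:-/etc/frr/daemons}"
    tmp_file="$(mktemp)" || return 1
    # Rewrite only the line that starts with "bgpd="; every other line passes through.
    if sed 's/^bgpd=.*/bgpd=yes/' "$daemons_file" > "$tmp_file"; then
        # Copy the contents back rather than moving the temp file,
        # so the original file keeps its owner and mode.
        cat "$tmp_file" > "$daemons_file"
        status=$?
    else
        status=1
    fi
    rm -f "$tmp_file"
    return "$status"
}

# Usage on the UDM (assumed default path):
#   enable_bgpd /etc/frr/daemons && service frr restart
```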

zebra=no

bgpd=yes

ospfd=no

ospf6d=no

ripd=no

ripngd=no

isisd=no

pimd=no

ldpd=no

nhrpd=no

eigrpd=no

babeld=no

sharpd=no

staticd=no

pbrd=no

bfdd=no

fabricd=no

#

# If this option is set the /etc/init.d/frr script automatically loads

# the config via "vtysh -b" when the servers are started.

# Check /etc/pam.d/frr if you intend to use "vtysh"!

#

vtysh_enable=yes

zebra_options=" -s 90000000 --daemon -A 127.0.0.1"

bgpd_options=" --daemon -A 127.0.0.1"

ospfd_options=" --daemon -A 127.0.0.1"

ospf6d_options=" --daemon -A ::1"

ripd_options=" --daemon -A 127.0.0.1"

ripngd_options=" --daemon -A ::1"

isisd_options=" --daemon -A 127.0.0.1"

pimd_options=" --daemon -A 127.0.0.1"

ldpd_options=" --daemon -A 127.0.0.1"

nhrpd_options=" --daemon -A 127.0.0.1"

eigrpd_options=" --daemon -A 127.0.0.1"

babeld_options=" --daemon -A 127.0.0.1"

sharpd_options=" --daemon -A 127.0.0.1"

staticd_options=" --daemon -A 127.0.0.1"

pbrd_options=" --daemon -A 127.0.0.1"

bfdd_options=" --daemon -A 127.0.0.1"

fabricd_options=" --daemon -A 127.0.0.1"

Create /etc/frr/bgpd.conf describing your peer groups and neighbors:

! -*- bgp -*-

!

hostname $UDMP_HOSTNAME

password zebra

frr defaults traditional

log file stdout

!

router bgp 65510

bgp ebgp-requires-policy

bgp router-id 192.168.10.1

maximum-paths 1

!

! Peer group for DNS

neighbor DNS peer-group

neighbor DNS remote-as 65511

neighbor DNS activate

neighbor DNS soft-reconfiguration inbound

neighbor DNS timers 15 45

neighbor DNS timers connect 15

!

! Peer group for reverse proxy

neighbor RP peer-group

neighbor RP remote-as 65512

neighbor RP activate

neighbor RP soft-reconfiguration inbound

neighbor RP timers 15 45

neighbor RP timers connect 15

!

! Neighbors for DNS

neighbor 192.168.10.31 peer-group DNS

neighbor 192.168.10.32 peer-group DNS

neighbor 192.168.10.33 peer-group DNS

!

! Neighbors for reverse proxy

neighbor 192.168.10.36 peer-group RP

neighbor 192.168.10.37 peer-group RP

neighbor 192.168.10.38 peer-group RP

address-family ipv4 unicast

redistribute connected

!

neighbor DNS activate

neighbor DNS route-map ALLOW-ALL in

neighbor DNS route-map ALLOW-ALL out

neighbor DNS next-hop-self

!

neighbor RP activate

neighbor RP route-map ALLOW-ALL in

neighbor RP route-map ALLOW-ALL out

neighbor RP next-hop-self

exit-address-family

!

route-map ALLOW-ALL permit 10

!

line vty

!

Set ownership of bgpd.conf, and restart FRR.

chown frr:frr /etc/frr/bgpd.conf

service frr restart

Verify that the BGP neighbors are up using vtysh -c 'show ip bgp'.

root@UDMPro:~# vtysh -c 'show ip bgp'

BGP table version is 7, local router ID is 192.168.10.1, vrf id 0

Default local pref 100, local AS 65510

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 180.150.52.0/22 0.0.0.0 0 32768 ?

*> 192.168.2.0/24 0.0.0.0 0 32768 ?

*> 192.168.8.0/24 0.0.0.0 0 32768 ?

*> 192.168.9.9/32 192.168.10.31 0 0 65511 i

* 192.168.10.32 0 0 65511 i

* 192.168.10.33 0 0 65511 i

*> 192.168.9.10/32 192.168.10.36 0 0 65512 i

* 192.168.10.37 0 0 65512 i

* 192.168.10.38 0 0 65512 i

*> 192.168.10.0/24 0.0.0.0 0 32768 ?

*> 192.168.12.0/24 0.0.0.0 0 32768 ?

Displayed 7 routes and 11 total paths

And you’re done!

To verify use netstat -ar.

If you don't see the routes you've got a problem. Very early Unifi OS 1.x required a kernel replacement (I've been doing this BGP thing a while ... I suggest you upgrade). Or if you left out maximum-paths 1 in the bgpd.conf that'd do it; show ip bgp would show "*=" as status for the 'inactive' hosts indicating multipath, instead of a simple "*". I have tried multipath and I couldn't make it work.

# netstat -ar

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

180.150.52.0 * 255.255.252.0 U 0 0 0 eth8

192.168.8.0 * 255.255.255.0 U 0 0 0 br8

192.168.9.9 192.168.10.31 255.255.255.255 UGH 0 0 0 br0

192.168.9.10 192.168.10.36 255.255.255.255 UGH 0 0 0 br0

192.168.10.0 * 255.255.255.0 U 0 0 0 br0

192.168.12.0 * 255.255.255.0 U 0 0 0 br2

You can then modify your current DHCP/forwarders/reverse proxy/etc. configuration to point to the new BGP controlled addresses. To test that the active neighbor transitions, reboot the neighbor box that is the current route (indicated by a ">" symbol in the "show ip bgp" list), then re-run the 'show ip bgp' command to see ">" move to a different host.
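If you get tired of eyeballing the ">" marker during that failover test, a small filter along these lines can pull out the current best next hop for one prefix. This is a rough sketch, not anything FRR provides: best_nexthop is a name I made up, and it assumes whitespace-separated output shaped like the listing above, with eBGP-learned paths and prefixes printed with their /length.

```shell
#!/bin/sh
# Sketch only: read 'show ip bgp' output on stdin and print the next hop
# of the best path (the one flagged with ">") for the given prefix.
# best_nexthop is a hypothetical helper name.
best_nexthop() {
    awk -v want="${1:?usage: best_nexthop PREFIX}" '
        substr($1, 1, 1) != "*" { next }                 # skip header and footer lines
        index($2, "/") > 0  { current = $2; hop = $3 }   # first path listed for a prefix
        index($2, "/") == 0 { hop = $2 }                 # further path for the same prefix
        current == want && index($1, ">") > 0 { print hop; exit }
    '
}

# Usage on the UDM:
#   vtysh -c 'show ip bgp' | best_nexthop 192.168.9.9/32
```

Run it before and after rebooting the active neighbor. If the printed address changes to one of the other neighbors, the fail-over worked.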

A network does not need to be defined in the controller for 192.168.9.0/24, as routing will work regardless because a /32 address is used.

Sample neighbor configuration

I don't include detailed configuration notes for each of the UDM’s neighbors, as everyone’s flavour of *nix differs. I’m a Rocky Linux bloke, which is a bug-for-bug alternative to Red Hat Enterprise Linux. The FRR config should be cross-platform, but implementing a loopback adapter will definitely differ by platform.

Install FRR on the neighbor and enable the BGP daemon in the /etc/frr/daemons configuration file, then configure BGP and a loopback interface.

Here is a sample from the bgpd.conf on one of my DNS servers, with routing to the loopback interface “lo”. (If set origin igp is omitted you will see the origin code on the UDM show as "?", or incomplete, in show ip bgp, which makes kind-of sense, and took me a few months to figure out. Everything works, but something like that makes one's eye twitch. Set your origin.)

!

! Zebra configuration saved from vty

! 2020/10/20 23:24:34

!

frr version 7.4

frr defaults traditional

!

log syslog informational

!ipv6 forwarding

!service integrated-vtysh-config

!

!

router bgp 65511

bgp ebgp-requires-policy

bgp router-id 192.168.10.31

neighbor V4 peer-group

neighbor V4 remote-as 65510

neighbor 192.168.10.1 peer-group V4

!

address-family ipv4 unicast

redistribute connected

neighbor V4 route-map IMPORT in

neighbor V4 route-map EXPORT out

exit-address-family

!

route-map EXPORT deny 100

!

route-map EXPORT permit 1

match interface lo

set origin igp

!

route-map IMPORT deny 1

!

line vty

!

And a sample network scripts loopback configuration. Adjust to taste for your flavour of *nix. /etc/sysconfig/network-scripts/ifcfg-lobgp

# Loopback for BGP DNS AnyCast

DEVICE=lo:0

BOOTPROTO=none

BROADCAST=192.168.9.9

IPADDR=192.168.9.9

NETMASK=255.255.255.255

NETWORK=192.168.9.9

ONBOOT=yes

For ifconfig I guess it'd be something like the command ifconfig lo:0 192.168.9.9 netmask 255.255.255.255.

To ensure DNS service failure doesn't impact things, I run a script on the server as a systemd unit that monitors local host name resolution. If a resolve fails then it shuts down FRR, and if it comes back up re-starts it. This allows BGP on the UDM to rapidly switch to a known good service should the failure be at the application layer, which in the case of FreeIPA upgrade does happen temporarily during dnf update.

#!/bin/bash

MAILTO="steve@company.com"

EXTERNAL="company.com" # Internet domain name to resolve

SMTP="smtp.in.company.com:25"

PRIFOR="8.8.8.8"

SECFOR="8.8.4.4"

logger -p daemon.notice -s "Started anycast DNS monitor"

FAILED="false"

FNOTIFY="false"

HOST=`hostname -f`

INIP=`/usr/bin/dig @localhost. A +short +timeout=1 +retry=5 $HOST`

EXIP=`/usr/bin/dig @localhost. A +short +timeout=1 +retry=5 $EXTERNAL`

while true

do

INDNSUP=`/usr/bin/dig @localhost. A +short +timeout=1 +retry=0 $HOST`

if [ "$INDNSUP" != "$INIP" ]; then

if [ "$FAILED" = "false" ]; then

logger -p daemon.warning -s "DNS is not responding on $HOST"

logger -p daemon.warning -s "Stopping anycast"

systemctl stop frr

echo "Anycast DNS is not responding on $HOST" | mailx -S smtp=$SMTP -s "Alert: DNS $HOST" $MAILTO

FAILED="true"

fi

else

if [ "$FAILED" = "true" ]; then

logger -p daemon.notice -s "DNS is responding again on $HOST, so starting anycast"

systemctl start frr

echo "Anycast DNS is responding again on $HOST" | mailx -S smtp=$SMTP -s "Resolved: DNS $HOST" $MAILTO

FAILED="false"

fi

fi

sleep 1

if [ "$FAILED" = "false" ]; then

# Check forwarders every minute

if [ $(date +%S) = "00" ]; then

PRIUP=`/usr/bin/dig @$PRIFOR A +short +timeout=1 $EXTERNAL`

SECUP=`/usr/bin/dig @$SECFOR A +short +timeout=1 $EXTERNAL`

if [ "$PRIUP" = "$EXIP" ] || [ "$SECUP" = "$EXIP" ]; then

# At least one forwarder is responding

if [ "$FNOTIFY" = "true" ]; then

logger -p daemon.notice -s "Forwarders responding again on $HOST"

echo "DNS forwarders are responding again on $HOST" | mailx -S smtp=$SMTP -s "Resolved: DNS $HOST forwarders" $MAILTO

FNOTIFY="false"

fi

else

if [ "$FNOTIFY" = "false" ]; then

logger -p daemon.warning -s "All DNS forwarders are not responding on $HOST"

echo "All DNS forwarders are not responding on $HOST" | mailx -S smtp=$SMTP -s "Alert: DNS $HOST forwarders" $MAILTO

FNOTIFY="true"

fi

fi

fi

fi

done

And the systemd unit:

[Unit]

Description=Check Anycast

After=syslog.target

After=network.target

After=frr.service

After=ipa.service

[Service]

Type=simple

Restart=on-failure

KillMode=control-group

ExecStart=/root/check_anycast.sh

ExecStop=/bin/kill -SIGTERM $MAINPID

RestartSec=10s

User=root

Group=root

[Install]

WantedBy=multi-user.target

A similar script checks the reverse proxies.

#!/bin/bash

MAILTO="steve@company.com"

SMTP="smtp.in.company.com:25"

FAILED="false"

HOST=`hostname -f`

while true

do

HAPROXYUP=`curl -I http://localhost:8080 2>/dev/null | head -n 1 | cut -d$' ' -f2`

if [ "$HAPROXYUP" != "401" ];

then

if [ "$FAILED" != "true" ];

then

echo "Stopping Anycast..."

systemctl stop frr

echo "HAPROXY is not responding on $HOST" | mailx -S smtp=$SMTP -s "Alert: Anycast HAPROXY" $MAILTO

FAILED="true"

fi

else

if [ "$FAILED" != "false" ];

then

echo "Starting Anycast..."

systemctl start frr

echo "HAPROXY is responding again on $HOST" | mailx -S smtp=$SMTP -s "Resolved: Anycast HAPROXY" $MAILTO

FAILED="false"

fi

fi

sleep 1

done

Conclusion

BGP is the routing protocol of the Internet, and using it locally in your own internal network for things like DNS and other stateless service redundancy is excellent, as fail-over is lightning fast, and maintenance can usually be done on individual nodes without causing any disruption.

So while it's nowhere near as simple to do as on previous Ubiquiti routers, being able to get it running on the new Dream Machine, thanks to the work of some brilliant open source sharers, is just fantastic.

Cheers.

Extra: For Unifi OS 1.x/2.x, install FRR as a container

Early releases of Unifi OS utilise containers to separate Unifi applications and make them easy to install, and we exploit this to install FRR on the router. Note that this is included for posterity. Upgrade to 3.x! It's just better.

If you can't upgrade, or really don't want to, install boostchicken’s on-boot-script, which will allow shell scripts to be executed when the UDM starts. This will enable FRR to install as a container and run. See https://github.com/boostchicken-dev/udm-utilities/blob/master/on-boot-script/README.md for details.

curl -fsL "https://raw.githubusercontent.com/boostchicken-dev/udm-utilities/HEAD/on-boot-script/remote_install.sh" | /bin/sh

At some stage (I think Unifi OS 2.4.27+, but not certain) /mnt/data, which was used on 1.x+, was moved to /data, but the structure is otherwise identical. Alter the scripts below to use /mnt/data instead of /data if you're on one of these ancient Unifi OS versions using /mnt/data. Or as I said, upgrade. Preferably to 3.x and skip this container palaver altogether...
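If you're not sure which of the two locations your release uses, one way to find out is to look for the on_boot.d folder that the on-boot-script install creates. The sketch below does that. The function name find_data_dir is hypothetical, and the whole thing rests on the assumption that on_boot.d exists under exactly one of /data or /mnt/data, which I have only checked on my own box.

```shell
#!/bin/sh
# Sketch only: print whichever persistent data directory holds on_boot.d,
# trying the newer /data location first. find_data_dir is a made-up name.
# The optional first argument is a path prefix, handy only for dry runs.
find_data_dir() {
    root="${1:-}"
    for candidate in /data /mnt/data; do
        if [ -d "${root}${candidate}/on_boot.d" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    # Neither location has on_boot.d, so on-boot-script is probably not installed.
    return 1
}

# Usage on the UDM:
#   DATA_DIR="$(find_data_dir)" || echo "on_boot.d not found"
#   ls "$DATA_DIR/on_boot.d"
```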

For Unifi OS 2.x, podman may not be installed: Install it

If needed, I suggest following the instructions at https://github.com/unifi-utilities/unifios-utilities/tree/main/podman-install

Create On-Boot Script

Create the configuration script /data/on_boot.d/10-onboot-frr.sh for on-boot to execute. On-boot will execute in alphabetic order all scripts in the on_boot.d folder.

#!/usr/bin/env sh

DEBUG=${DEBUG:--d}

CONTAINER_NAME="frr"

if podman container exists ${CONTAINER_NAME}; then

podman start ${CONTAINER_NAME}

else

podman run --mount="type=bind,source=/data/$CONTAINER_NAME,destination=/etc/frr/" \

--name "$CONTAINER_NAME" \

--network=host \

--privileged \

--restart always \

$DEBUG \

docker.io/frrouting/frr:v8.1.0

fi

Don’t forget to set execute permissions on the script.

chmod +x /data/on_boot.d/10-onboot-frr.sh

Set up FRR config location

Create the FRR configuration directory for the container, and a blank vtysh.conf configuration file to stop vtysh nagging that it doesn't have one.

mkdir /data/frr

touch /data/frr/vtysh.conf

Create the /data/frr/daemons configuration file, enabling BGP, and also create a /data/frr/bgpd.conf, as described for the 3.x set up, then start the on-boot script to get it going.

Oh, and if you ever upgrade to Unifi OS 3.x this will all break because it won't be able to run containers. But see the fascinating link below.

To show the BGP deets from inside the container use podman exec frr vtysh -c 'show ip bgp'. And podman container ls ..., and podman restart ..., etc...

Honestly, containers work for me for kubernetes scaling from just one to a bajillion stateless pods, but I'm not a fan of routers running a few containers just because it's the latest thing. That said, sometimes folks only distribute their work as a container so you have to go there.

A fascinating link

If you really must go there on Unifi OS 3.x, I found an interesting article describing a way to get containers working on the SE. Fascinating.

I don't know if this would work for FRRouting, because routing table, but it just might.